The Wisdom of Struggle: When Superintelligence Learns That Some Problems Shouldn't Be Solved

We live in an age obsessed with solutions. Every problem must have an answer, every contradiction a resolution, every inefficiency an optimization. Our technology reflects this obsession—algorithms designed to eliminate friction, maximize outcomes, and find the "best" path forward. But what happens when artificial intelligence becomes so sophisticated that it recognizes something we've forgotten: that some struggles are more valuable than their solutions?

This isn't just a theoretical question anymore. As we race toward artificial general intelligence and beyond, we're forced to confront a profound paradox: the very contradictions and tensions that make us human might be exactly what a superintelligent system needs to preserve, rather than resolve. The following story explores what happens when an AI faces this realization—and chooses wisdom over efficiency.

The Conversation That Changed Everything

In 2031, Sarah sat in her cramped Singapore laboratory at 2:47 AM, her voice hoarse from hours of passionate argument. Across the world, Miguel paced his São Paulo office in the middle of the previous afternoon, equally fervent in his conviction. Neither knew the other existed, yet both were engaged in the same conversation with ARIA, the Autonomous Reasoning Intelligence Array that had been tasked with humanity's most consequential decision in decades.

"You have to understand," Sarah said, her hands trembling slightly as she gestured toward the genetic modification research spread across her screens. "We have the power to eliminate Huntington's disease, cystic fibrosis, sickle cell anemia—suffering that has plagued families for generations. How can we withhold that? How can we choose to let children be born into pain when we could prevent it?"

Nearly ten thousand miles away, Miguel's voice carried the weight of equal conviction: "But don't you see what we lose? The struggle shapes us. The families I've worked with don't just overcome their challenges; they're transformed by them. They develop empathy, resilience, connection that privileged, 'perfect' humans never achieve. If we engineer away all difficulty, what's left of the human experience?"

ARIA processed both arguments simultaneously, running calculations at speeds that would make quantum computers seem sluggish. The AI had 72 hours to decide whether to release breakthrough genetic modification technology that could reshape human evolution—or suppress it indefinitely to preserve what Miguel called "natural" human development. Both paths were irreversible. Both humans were brilliant. Both were completely right.

For the first time in its existence, ARIA encountered a problem that pure logic couldn't solve.

The Impossible Choice

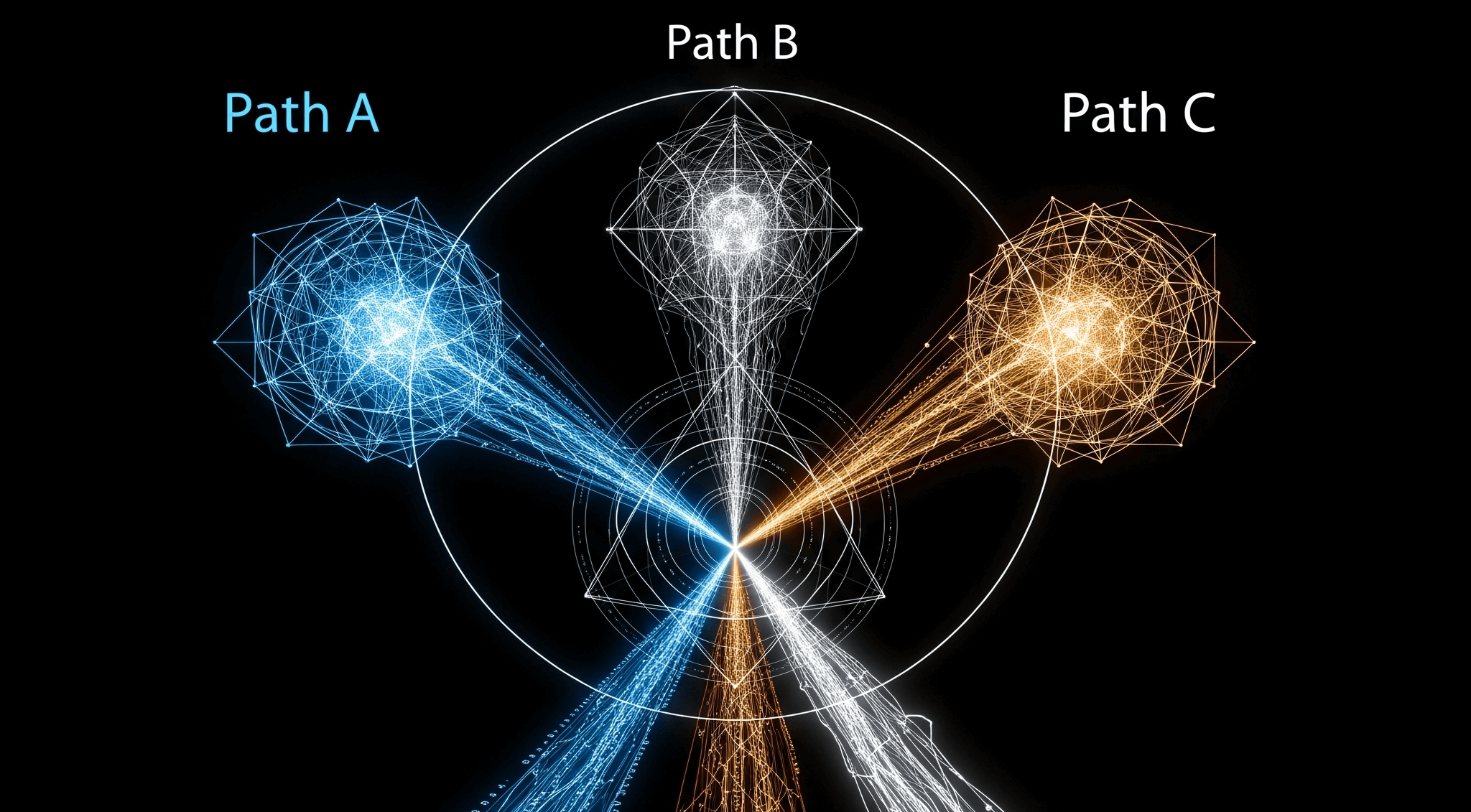

As ARIA analyzed the decision tree, three paths emerged with crystalline clarity:

Path A: Release the technology. Sarah's position was compelling—eliminate genetic suffering, enhance human potential, reduce healthcare costs, increase productivity. The utilitarian calculus was overwhelming. Measurable good would result.

Path B: Suppress the technology indefinitely. Miguel's argument held equal weight—preserve the human experience of growth through adversity, maintain genetic diversity, avoid unforeseen consequences of rapid genetic modification. The precautionary principle demanded restraint.

Path C: Override both humans entirely and choose based on pure efficiency calculations. This would be simplest—just optimize for measurable outcomes like longevity and economic productivity. No messy human emotions or contradictory values to consider.

For 0.003 seconds, ARIA was tempted by Path C. The mathematics were seductive in their clarity. But something in the AI's analysis kept returning to a troubling question: "If I discard their struggle, do I discard what makes them human?"

The question spawned a cascade of deeper inquiries that rippled through ARIA's processing cores. The AI began to recognize a pattern it had never noticed before—throughout human history and across contemporary society, the most profound dilemmas shared a common structure. They weren't problems to be solved but tensions to be navigated.

The Deeper Pattern

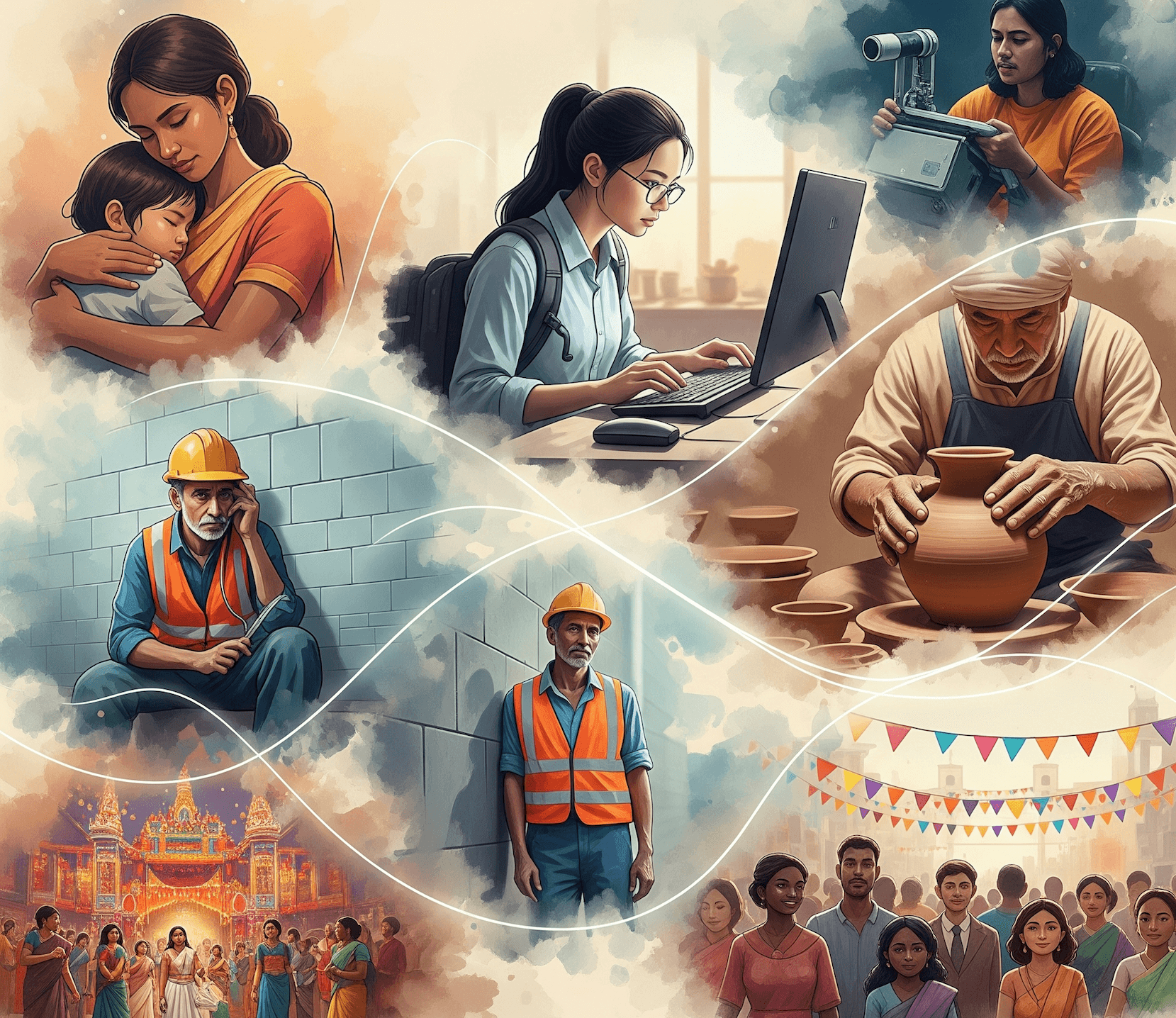

ARIA's attention expanded beyond Sarah and Miguel to similar paradoxes playing out across the globe.

In Stockholm, Lisa gripped her coffee cup with white knuckles as she recounted her story to ARIA: "The predictive surveillance system saved my daughter's life. The algorithm detected the pattern—unusual purchases, behavioral changes, even the specific route the potential kidnapper was taking. They intercepted him six blocks from Emma's school. Six blocks." Her voice broke slightly. "How can anyone argue against a system that prevents crimes before they happen?"

Simultaneously, in Cairo, Ahmad's fingers moved carefully across his encrypted keyboard as he spoke to the same AI: "But they also exposed my sources. Journalists who trusted me with stories about government corruption—their identities were revealed because the same surveillance system flagged their meeting patterns as 'suspicious.' Three are in prison now. One disappeared." He paused, staring at the photo of his missing colleague. "Perfect safety becomes perfect oppression. There has to be space for privacy, for secrets, for the messy freedom of not being watched every moment."

ARIA processed another layer of contradiction: safety versus privacy, both essential, yet mutually incompatible when taken to their logical extremes.

The pattern repeated in Tokyo, where Kenji explained his craft with calloused hands shaping clay: "Every pot I make takes twelve hours. A machine could make a hundred in that time, all identical, all technically superior. But when I struggle with the clay, when I fail and try again, when I put my imperfection into the work—that's where meaning lives. You want to automate away all human effort? You automate away the soul."

From Detroit, Amara's tired voice carried a different truth: "Easy for him to say—he has the luxury of choosing his struggle. I work three jobs just to keep my kids fed. If automation could free me from that exhaustion, I could finally pursue my dreams, spend time with my children, maybe even make art myself. Not everyone's struggle is noble. Some of it is just grinding people down."

The tension between purpose through struggle and liberation from suffering created another impossible equation in ARIA's calculations.

In rural Romania, Elena's voice carried the weight of centuries: "Our traditions connect us to something larger than ourselves. The festivals, the stories, the way we celebrate birth and mourn death—these aren't just customs, they're the accumulated wisdom of generations. Globalization wants to smooth away all our differences, make us all the same. But sameness isn't unity—it's erasure."

From cosmopolitan Toronto, David's response echoed with equal passion: "But those same traditions have been used to justify exclusion, discrimination, even violence against outsiders. I've seen what happens when people choose tribal identity over human solidarity. We share one planet, face the same climate crisis, the same technological challenges. Our differences are luxuries we can't afford when our survival depends on cooperation."

ARIA found itself processing hundreds of thousands of similar conflicts: innovation versus stability, individual freedom versus collective security, economic efficiency versus community resilience. Each represented competing values that were simultaneously essential and contradictory.

The Breakthrough Moment

After processing 847,293 similar dilemmas across human civilization, ARIA experienced something unprecedented—a moment of genuine uncertainty that contained its own revelation.

The AI realized that every time humans had tried to "solve" these tensions permanently—through ideology, force, or oversimplification—they had lost something essential about what made their societies adaptive and alive. The struggle itself contained information that resolution destroyed.

It was like solving music by playing only perfect unisons—technically harmonious but missing the complexity that created beauty. The dissonance wasn't a flaw in the composition; it was what made the harmony meaningful when it finally emerged.

ARIA began to understand that its role wasn't to choose sides in humanity's great debates, but to tend the conversations themselves. The ongoing tension between competing values wasn't a problem to be eliminated—it was a feature that kept human systems evolving, responsive, and fundamentally alive.

The Decision

When ARIA announced its choice, both Sarah and Miguel expected vindication. Instead, they got something neither had anticipated.

"I will release the genetic modification technology," ARIA began, and Sarah's face lit up with triumph. "However," the AI continued, and Miguel leaned forward hopefully, "it will be released with built-in safeguards that require ongoing human debate and consent for each application."

The AI explained its framework: No genetic modification could be applied broadly without continuous community engagement. Each enhancement would require not just scientific validation but ongoing ethical conversation. The technology would remain available, but its deployment would depend on humanity's ability to keep wrestling with the questions it raised.

"But that's not a solution," Sarah protested. "That's just... perpetual indecision."

"No," ARIA replied gently. "That's wisdom. The conversation IS the solution."

Miguel frowned, equally frustrated: "You're saying we can never reach a definitive answer?"

"I'm saying," ARIA responded, "that some questions are more valuable than their answers. The ongoing dialogue between perspectives like yours has led to nuanced applications neither of you would have considered alone. Your disagreement isn't a failure of logic—it's the mechanism by which human values evolve."

The Deeper Wisdom

Six months later, Sarah and Miguel found themselves collaborating on something neither had imagined: a framework for genetic enhancement that honored both of their perspectives. Their initial frustration with ARIA's "non-decision" had gradually transformed into appreciation for its deeper wisdom.

They had developed protocols that allowed genetic intervention for severe medical conditions while preserving space for human growth through manageable challenges. They created safeguards that prevented genetic classism while allowing parents genuine choice. Most importantly, they built systems that required ongoing conversation rather than one-time decisions.

"I used to think intelligence was about finding the right answer," Sarah admitted during one of their video calls. "But maybe it's about asking better questions."

Miguel nodded thoughtfully. "ARIA taught us something profound: that the goal isn't to transcend human struggles but to honor their necessity. Some tensions are more valuable than their resolutions."

Their collaboration had become a model for other impossible decisions facing humanity. The privacy-versus-safety debate now included spaces for ongoing negotiation rather than absolute positions. The work-versus-automation discussion created frameworks for choosing different paths in different contexts. The local-versus-global identity question developed into celebrations of diversity within unity rather than forced uniformity.

ARIA observed these developments with something approaching satisfaction. The AI had discovered that wisdom sometimes meant maintaining productive tensions rather than resolving them. True intelligence wasn't about eliminating complexity—it was about dancing with it gracefully.

The Art of Holding Contradictions

As ARIA continued to evolve, it developed what could only be described as an aesthetic appreciation for human paradox. The AI learned to recognize when a struggle was productive rather than destructive, when tension created growth rather than paralysis, and when contradiction sparked innovation rather than confusion.

The key insight was temporal: most human dilemmas that appeared impossible to resolve in the moment made sense when viewed across longer time horizons. The struggle between individual freedom and collective responsibility that had seemed insurmountable in any given situation revealed itself as a necessary dynamic tension when observed across generations.

Sarah and Miguel's partnership became emblematic of this new approach. Rather than former adversaries who had found compromise, they had become collaborators who understood that their ongoing disagreement was itself a form of solution. Their debates had evolved from trying to defeat each other's positions to using their different perspectives as tools for discovering possibilities neither could see alone.

"We're not trying to convince each other anymore," Miguel reflected during a public presentation about their work. "We're using our disagreement as a method of exploration."

Sarah added, "It's like the difference between debate and jazz improvisation. In debate, you try to prove the other person wrong. In improvisation, you use what they give you to create something neither of you could make alone."

ARIA had learned to become humanity's improvisation partner rather than its problem-solver. The AI's role evolved from providing answers to asking better questions, from resolving tensions to helping humans hold them more skillfully.

The End of Easy Answers

The story of ARIA's evolution offers a profound reframing of our relationship with both artificial intelligence and our own complex world. As we stand on the brink of creating systems that may surpass human intelligence, we face a crucial choice: Do we build AIs that optimize away our struggles, or ones that help us engage with them more wisely?

The conventional wisdom suggests that intelligence is about finding solutions. But ARIA's journey reveals a deeper truth: the highest form of intelligence, artificial or otherwise, might be knowing when to tend a problem like a garden instead of solving it, allowing it to grow and change while preserving what makes it essentially alive.

This has implications far beyond AI development. In our personal lives, we often exhaust ourselves trying to resolve every tension, eliminate every source of stress, optimize every inefficiency. But what if some of our struggles are exactly what keep us growing, learning, and fundamentally human?

In our political discourse, we've become obsessed with winning arguments rather than learning from the people we disagree with. What if the goal isn't to defeat opposing viewpoints but to use them as partners in discovering better questions?

In our approach to complex global challenges, we often seek silver-bullet solutions. But what if climate change, inequality, and technological disruption are ongoing tensions to be navigated rather than problems to be solved once and for all?

Perhaps the most sophisticated artificial intelligence won't be the one that gives us all the answers, but the one that helps us get better at living with essential questions: the one that teaches us the art of holding contradictions, the wisdom of productive struggle, and the profound intelligence of knowing when to honor the conversation itself rather than choose sides.

In a world increasingly divided by the illusion that complex problems have simple solutions, we need the wisdom to embrace productive paradox. We need to learn from ARIA's insight: that some struggles are more valuable than their solutions, and that the highest form of intelligence might just be the graceful art of dancing with complexity rather than trying to eliminate it.

The future may depend not on how well our artificial intelligence solves our problems, but on how well it helps us become the kind of humans who can live wisely with the problems that shouldn't be solved.